For the past few days the Internet has been all aflutter about Google Buzz, some saying it's a Twitter killer. Google Buzz is not like Twitter. Rather, it is like FriendFeed. I have used FriendFeed for quite some time to aggregate all of my online content into a single stream, and Google Buzz is designed to do exactly the same thing.

Like FriendFeed, Google Buzz consumes content from Twitter and other sources: you can have your Twitter posts show up on either service, as well as your blog posts, your online photos, forum comments, and so forth. Content originates in many places, but these services bring it all together in one place specific to the person who created it.

Now, I have no problem with Google creating their own FriendFeed and then foisting it on all Gmail users. I think it's a great idea. My mother, for example, will never set up an account with FriendFeed, and she hasn't quite figured out Google Reader, but she might just try out this Buzz thing in her Gmail inbox, where she might see my latest tweet, blog post, photo album, or shared article.

Some of this stuff she already sees, since I import my Twitter updates and blog posts into Facebook, so in a way, Google Buzz is also competing with Facebook.

Online Identity

When you activate Buzz in your Google account, they let you know that they are making your Google Profile public, and that that includes your first and last name.

As it happens, I have gone to the trouble of NOT directly associating any of my public online content with my real name. That way if you "Google" my name, you don't find all of my content (insert horror story here about prospective employers finding something they don't like or disagree with).

My real name is of course associated with my content inside of Facebook, but that content-name link is only available to my friends. Most of the same content is available outside of Facebook, but it is tied to "burndive", not my name.

Google's corporate mission is "to organize the world's information and make it universally accessible and useful". This is no doubt why they are pushing for people to publicly link their full real name with all of their online content.

Well, I'm not going to be pushed.

For many people who do not maintain a barrier online between their friends and the public, this will not be an issue, but for me it is.

I use my Google account as my primary e-mail address. I want my name to be associated with my e-mail address for my contacts, so I can't simply change my name to a pseudonym on my Google Profile, and it would be extremely disruptive for me to switch to another Google account.

Google is obviously aware of people in my situation, because they already have a feature in the Google Profile called a "nickname". Anyone on my contacts list will see my real name on my profile; everyone else will see my nickname. This is how it works with Google Reader shared items, and it's a very good system. They just don't want to use it with Google Buzz.

The Problem

I went ahead and added my blogs, Google Reader, my Twitter account, and my Picasa Web account to Google Buzz, but nothing was being imported except Google Reader.

I looked further into the matter, and it turns out that it wasn't importing my content because, after signing up for Buzz, I had realized what happened and restored my profile privacy settings. "That's logical," I thought. "They won't let me post publicly because my name isn't public. I'll just change the import settings so only my friends and family see the posts. They can already see my name." No dice.

So what was up with Google Reader? As it turns out, Google Reader has separate privacy settings, but I discovered that it will STILL share your full name on the posts and make it visible to the world.

So, after a brief stint, I have turned off Google Buzz. I never really intended to consume content there, but I had hoped it would be a venue where others who would otherwise have no occasion to see my content could consume it, and a user-friendly comment forum.

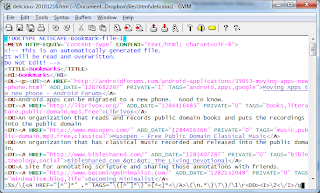

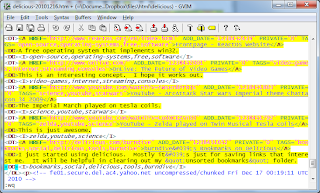

If it did, you would at least be able to open the web page and search for a specific tag. The tags are there in the HTML source, but they aren't visible on the page.
